The Basic Reasoning Test That Separates Real Intelligence from AI

5 Nov 2025

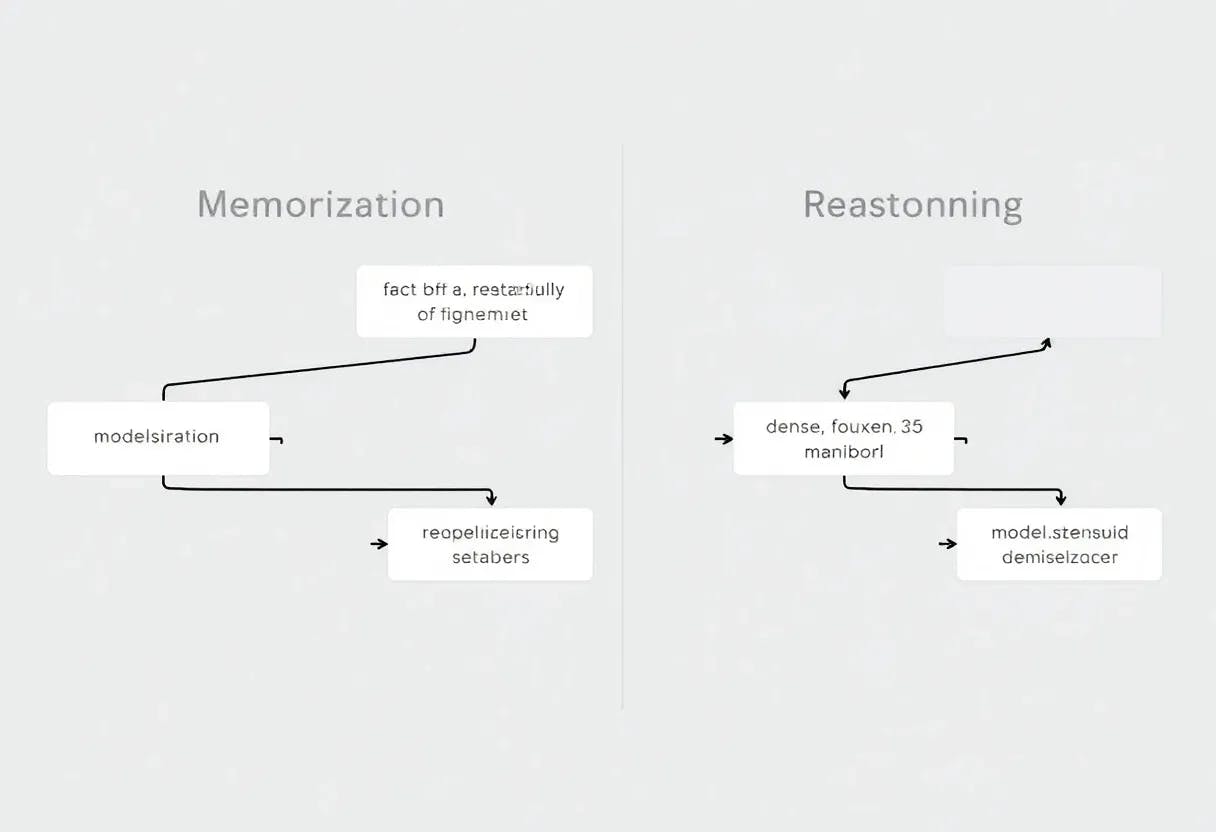

Although neural nets are Turing-complete in theory and could handle any problem, practical models such as GPT struggle with basic reasoning tasks that require multiple steps.

Research from Apple and EPFL Explains Why AI Models Can’t Truly “Reason” Yet

5 Nov 2025

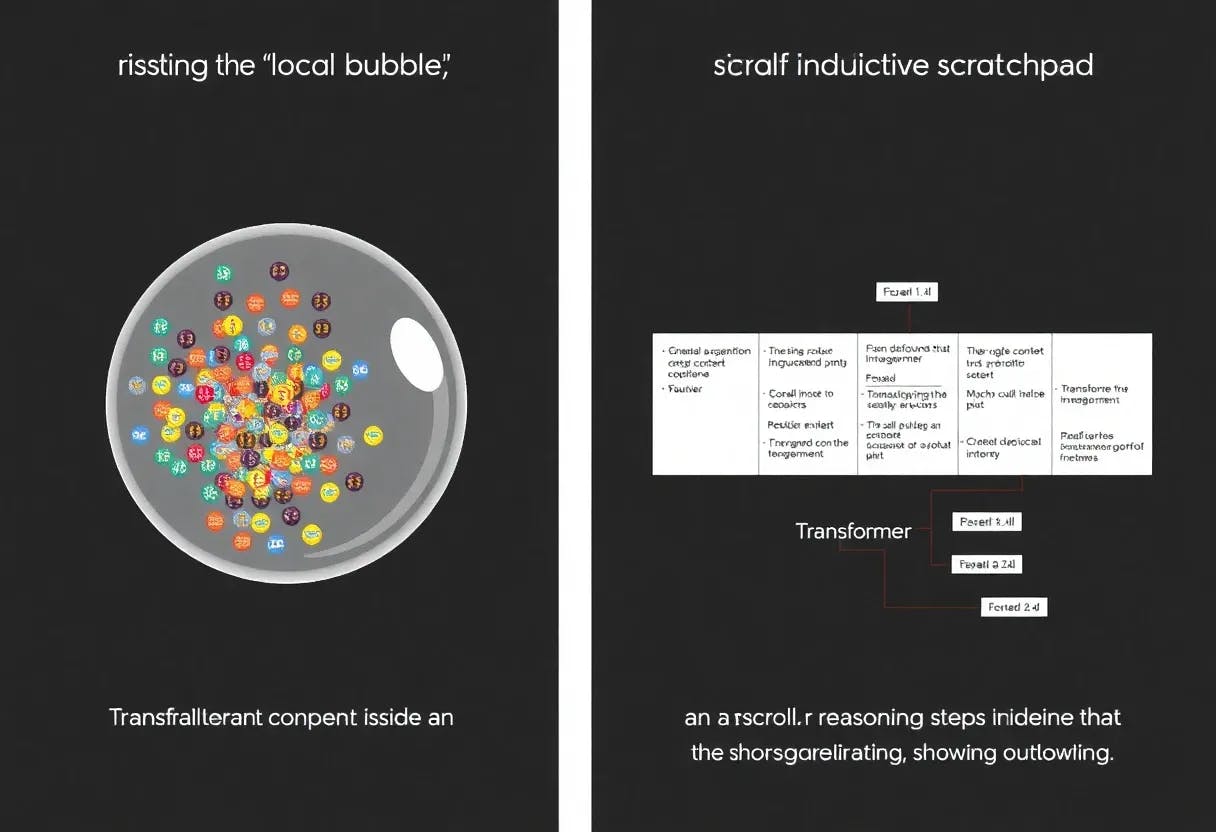

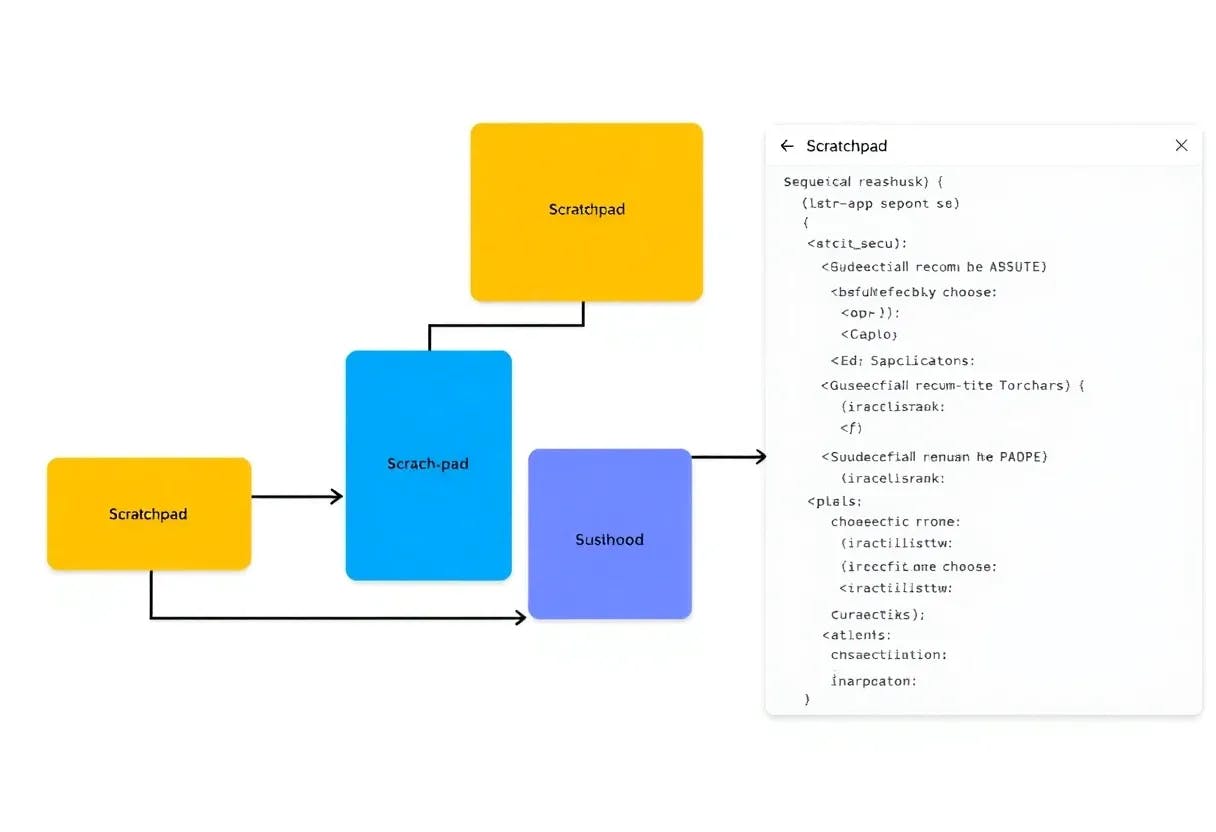

Even with an "agnostic scratchpad," transformers are unable to learn high-locality tasks unless the scratchpad is explicitly guided.

Overcoming Locality in Auto-Regressive Transformers

5 Nov 2025

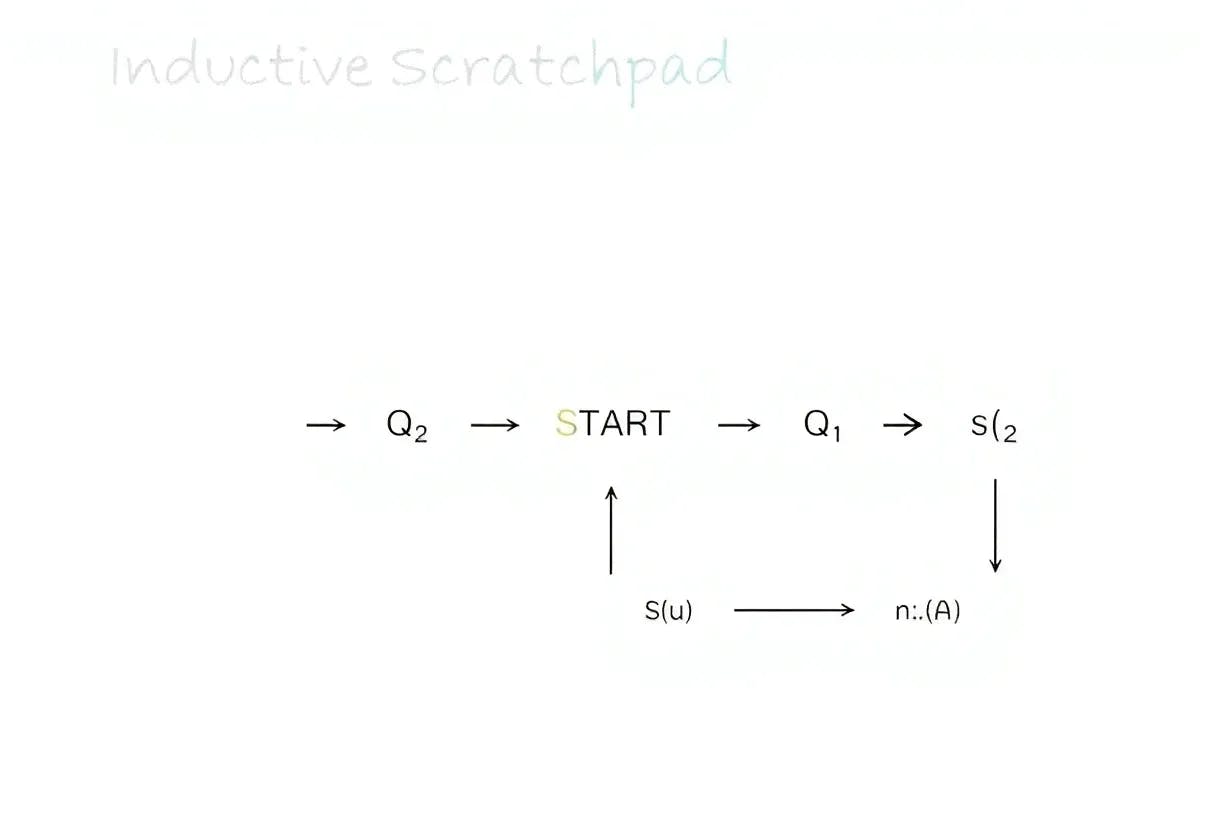

Using specific attention masks, researchers created inductive scratchpads that let transformers learn recursive reasoning, allowing for length generalization.

Does Progressive Training Improve Neural Network Reasoning Ability?

5 Nov 2025

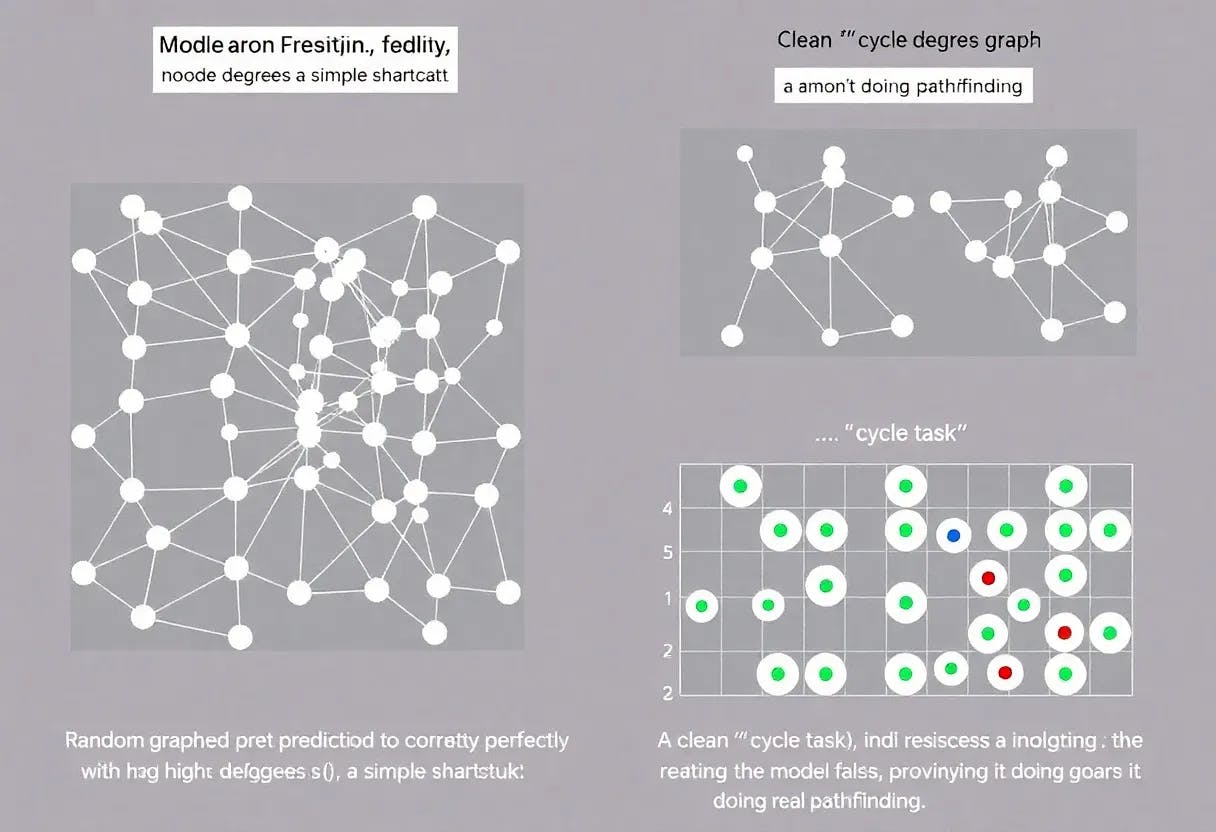

When faced with random graphs, transformers rely on statistical shortcuts such as node degrees for path-finding, which shows that they fail to learn true graph reasoning.

The Science of Reasoning in Large Language Models

5 Nov 2025

Transformer reasoning is limited by "distribution locality": it breaks down when outputs depend on the global structure of the input.

The Training Technique That Teaches AI to Think, Not Memorize

3 Nov 2025

Inductive scratchpads enable transformers to effectively generalize algorithmic reasoning, which overcomes the local reasoning barrier.

How Inductive Scratchpads Help Transformers Learn Beyond Their Training Data

3 Nov 2025

Thanks to scratchpads, transformers can generalize to longer reasoning processes and overcome local reasoning limitations.

Understanding the Local Reasoning Barrier in Transformer Models

3 Nov 2025

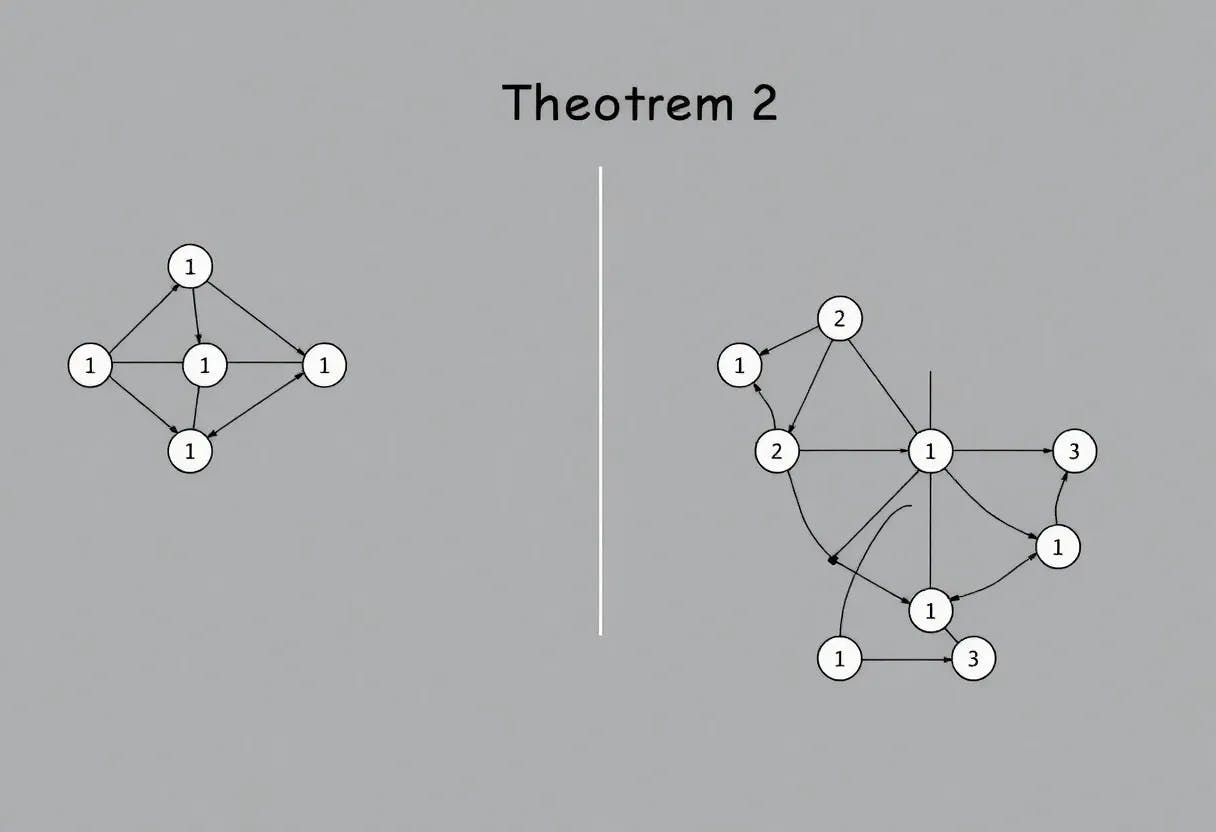

Tasks whose data distributions have high locality are difficult for transformers to learn because they require global reasoning.

Why Transformers Struggle with Global Reasoning

3 Nov 2025

A fundamental "local reasoning barrier" prevents transformers from performing global reasoning and composing logical inferences.